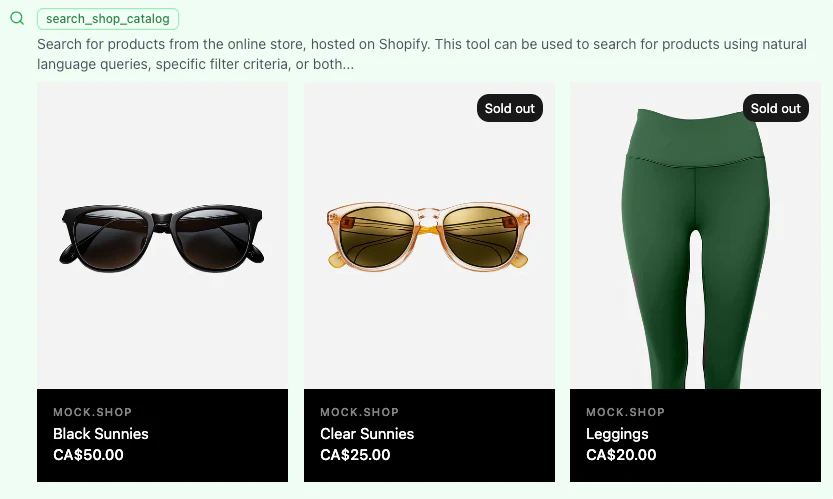

Shopify lit the fuse on MCP UI with their “MCP UI: Breaking the text wall with interactive components” blog post, published on August 5, 2025 (shopify.engineering).

That post lays out how MCP UI extends the Model Context Protocol to return embedded interactive components. As Shopify explains: “Commerce UI is deceptively complex […] we thought, what if MCP could return not just data, but fully interactive UI components?”

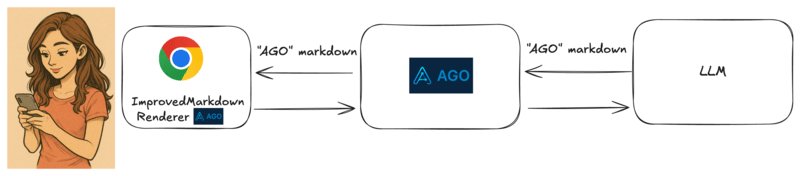

The problem before MCP UI

Traditional LLM-driven UIs rely on:

- Extended markdown (with custom tags or placeholders)

- Search-and-replace patterns (embedding videos, links, or components)

- Component injection (turning placeholders into React/HTML components)

But these methods have two drawbacks:

- LLMs struggle to generate complex UIs reliably and often produce incomplete or malformed instructions. Unlike function calls, which have a clear structure, UI generation is free-form and error-prone.

- Frontend rendering is tightly coupled to specific backend and frontend implementations, which prevents reuse across third-party applications.
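To make the first drawback concrete, here is a minimal sketch of the placeholder approach. The `[[product id=22]]` tag syntax and the `splitPlaceholders` function are hypothetical, invented for illustration; real systems use their own conventions. The point is only that a single missing bracket from the model is enough for the component to silently fall back to plain text.

```typescript
// A parsed chunk of LLM output: either plain text or a component to inject.
type Segment =
  | { kind: "text"; value: string }
  | { kind: "component"; name: string; props: Record<string, string> };

// Splits LLM output on hypothetical tags such as [[product id=22 size=M]].
function splitPlaceholders(markdown: string): Segment[] {
  // Only well-formed tags match; anything else is left as text.
  const tag = /\[\[(\w+)((?:\s+\w+=[^\s\]]+)*)\]\]/g;
  const segments: Segment[] = [];
  let cursor = 0;
  let match: RegExpExecArray | null;
  while ((match = tag.exec(markdown)) !== null) {
    if (match.index > cursor) {
      segments.push({ kind: "text", value: markdown.slice(cursor, match.index) });
    }
    // Turn "id=22 size=M" into { id: "22", size: "M" }.
    const props: Record<string, string> = {};
    for (const pair of match[2].trim().split(/\s+/).filter(Boolean)) {
      const eq = pair.indexOf("=");
      props[pair.slice(0, eq)] = pair.slice(eq + 1);
    }
    segments.push({ kind: "component", name: match[1], props });
    cursor = match.index + match[0].length;
  }
  if (cursor < markdown.length) {
    segments.push({ kind: "text", value: markdown.slice(cursor) });
  }
  return segments;
}
```

With well-formed output, `splitPlaceholders("See [[product id=22]] now")` yields a text segment, a component segment, and another text segment. If the model drops one closing bracket, the whole string comes back as a single text segment and the user sees the raw tag.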

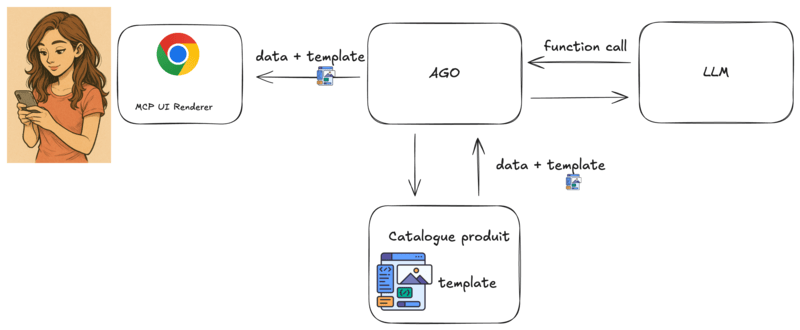

How MCP UI renders complex third-party UIs reliably

Instead of incomplete instructions, MCP UI returns a fully generated HTML component that the frontend can display directly.

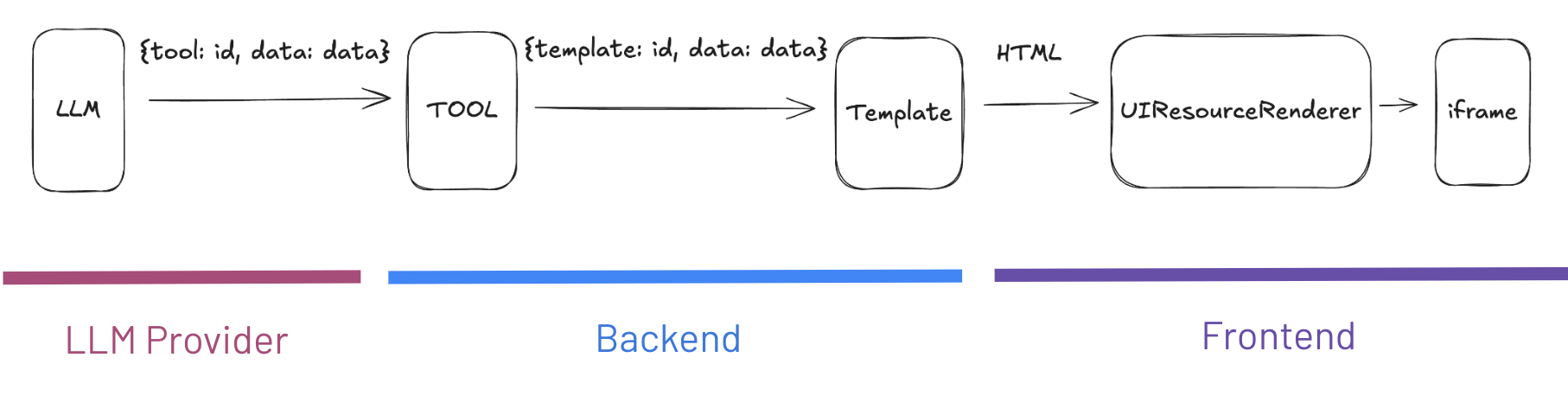

Step by step, the process looks like this:

- The LLM selects a tool to call with the appropriate data, for example: DisplayProduct({ product_id: 22 })

- The backend gets the template linked to the tool.

- It then renders the HTML using a templating engine like Jinja.

- The output is sent to the frontend as a complete HTML string of the component.

- On the frontend, this HTML is rendered inside a sandboxed iframe, for security and stability, using an MCP UI renderer that is available on GitHub:

```jsx
<iframe
  srcDoc={htmlString}
  sandbox={sandbox}
  style={{width: config[0], height: config[1], ...style}}
  title="MCP HTML Resource"
  {...iframeProps}
  ref={iframeRef}
  onLoad={onIframeLoad}
/>
```
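The backend half of the steps above (tool call in, HTML string out) can be sketched as follows. This is a minimal TypeScript illustration, not Shopify's or MCP UI's actual implementation: the `templates` registry, the `catalog`, and the tiny `renderTemplate` function are all hypothetical stand-ins, with `renderTemplate` playing the role that a real templating engine such as Jinja would play.

```typescript
// Hypothetical registry: each tool name maps to an HTML template.
const templates: Record<string, string> = {
  DisplayProduct:
    '<article class="product"><h2>{{ name }}</h2><p>{{ price }}</p></article>',
};

// Hypothetical in-memory catalog standing in for a real product lookup.
const catalog: Record<number, { name: string; price: string }> = {
  22: { name: "Canvas <Tote> Bag", price: "$35.00" },
};

// Escape values before interpolation so product data cannot inject markup.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Stand-in for a templating engine: replaces {{ key }} with escaped values.
function renderTemplate(template: string, data: Record<string, string>): string {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (_whole: string, key: string) => {
    if (!(key in data)) throw new Error(`Missing template value: ${key}`);
    return escapeHtml(data[key]);
  });
}

// Tool call in, complete HTML string out.
function handleToolCall(tool: string, args: { product_id: number }): string {
  const template = templates[tool];
  if (template === undefined) throw new Error(`Unknown tool: ${tool}`);
  const product = catalog[args.product_id];
  if (product === undefined) throw new Error(`Unknown product: ${args.product_id}`);
  return renderTemplate(template, product);
}
```

The string returned by `handleToolCall("DisplayProduct", { product_id: 22 })` is what the frontend would pass to the iframe as `srcDoc`. The LLM only supplies the tool name and arguments, so the markup itself never depends on what the model generates.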

With this architecture, third parties can host their own MCP UI servers, enabling a decentralized ecosystem of interactive components. For example, a payment processor could host a checkout component that any LLM-powered app could embed. Philosophically, this mirrors how MCP itself enables decentralized data retrieval.

It also enables richer interactions like sorting and filtering, things Markdown can’t handle. This complements LLMs well, since they’re notoriously poor at sorting long lists by generated criteria.
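As an illustration, sorting and filtering inside a component is ordinary deterministic code rather than something the model has to get right token by token. The sketch below assumes a hypothetical `Product` shape and helper names; nothing here comes from the MCP UI codebase.

```typescript
// Hypothetical product shape used by an embedded listing component.
interface Product {
  name: string;
  price: number;
  inStock: boolean;
}

type SortKey = "name" | "price";

// Returns a sorted copy; the input array is left untouched.
function sortProducts(
  items: readonly Product[],
  key: SortKey,
  descending = false
): Product[] {
  const sorted = items.slice().sort((a, b) =>
    key === "price" ? a.price - b.price : a.name.localeCompare(b.name)
  );
  return descending ? sorted.reverse() : sorted;
}

// Keeps only the products that can actually be bought right now.
function filterInStock(items: readonly Product[]): Product[] {
  return items.filter((item) => item.inStock);
}
```

A component can wire these helpers to a dropdown or a checkbox, so the user re-sorts a long list instantly without another round trip through the LLM.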

Why This Matters for Commerce

AI assistants like ChatGPT and Perplexity are moving into e-commerce. They’re no longer just answering questions; they’re becoming storefronts!

Text-only chat is insufficient for retail. Customers expect:

- Product images

- Size and color selectors

- Dynamic pricing and availability

- Interactive shopping carts

MCP UI delivers exactly that: immersive shopping experiences directly inside AI assistants.

If your backend doesn’t support something like MCP UI, you risk losing market share to competitors who let customers “shop” directly through AI.

Customer Service With Actions

Beyond shopping, MCP UI also transforms customer service. Instead of static responses, assistants can present actionable UI components:

- Buttons to reschedule a delivery

- Forms to request a return or refund

- Interactive troubleshooting flows with dynamic updates

- Secure payment or authentication prompts

This shifts customer service from a reactive, text-heavy exchange to a guided, interactive experience where problems get solved inside the conversation itself. The assistant is no longer just a support agent: it’s an operational front-end able to trigger real backend actions seamlessly.
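For a button or form to trigger a backend action, the host application has to receive a message from the embedded component and decide whether to act on it. The sketch below shows one way that check could look. The message shape (`type: "tool"` with a `toolName` and `params`) and the allowlisted tool names are assumptions made for illustration, not a documented AGO or MCP UI contract.

```typescript
// Hypothetical message shape posted by an embedded component to its host.
interface UiAction {
  type: "tool";
  payload: { toolName: string; params: Record<string, unknown> };
}

// Hypothetical allowlist of backend actions the host is willing to trigger.
const ALLOWED_TOOLS: readonly string[] = ["RescheduleDelivery", "RequestRefund"];

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Returns a validated action, or null if the message should be ignored.
function parseUiAction(data: unknown): UiAction | null {
  if (!isRecord(data) || data.type !== "tool") return null;
  const payload = data.payload;
  if (!isRecord(payload)) return null;
  const toolName = payload.toolName;
  const params = payload.params;
  if (typeof toolName !== "string" || !isRecord(params)) return null;
  // Never let the component name an arbitrary backend action.
  if (ALLOWED_TOOLS.indexOf(toolName) === -1) return null;
  return { type: "tool", payload: { toolName, params } };
}
```

In a browser, a host would typically call `parseUiAction(event.data)` from a `message` event listener, after confirming that `event.source` is the iframe it rendered. Treating everything that comes out of the iframe as untrusted input keeps the sandbox meaningful even when the component is hosted by a third party.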

This is exactly what we do at AGO with our MCP UI integration. Our AI agents create dynamic, interactive UIs that let customers solve their issues directly within the chat.

Ready to transform your customer support with AGO's MCP UI system? Book a demo and see how we can help revolutionize your customer experience.